OSL camera

The OSL Camera node is a scriptable node. You can create custom camera types for any purpose (such as VR warping) with OSL (Open Shading Language) scripts. It is a flexible camera that can be used to match the rendering to existing footage. One OSL Camera node is one OSL compilation unit containing one shader, so it has a single output attribute pin, which connects to a Render Target node's Camera input pin. OSL is a standard created by Sony Pictures Imageworks. To learn about the generic OSL standard, see the OSL Readme and PDF documentation.

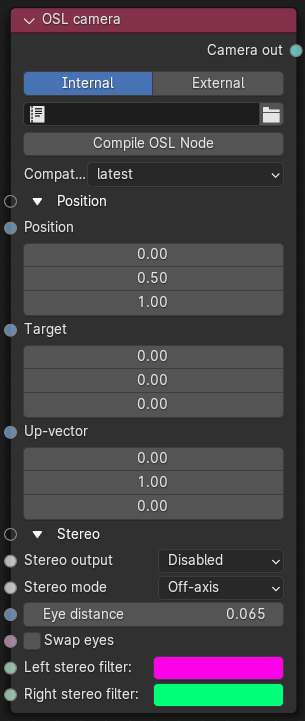

The inherent attributes of an OSL Camera node includes its position, target, up-vector (orientation) and stereo-related parameters. This means the moment you invoke an OSL Camera node, it supports Viewport controls, camera motion blur, and stereo rendering.

Figure 1: OSL camera Node

The customized OSL script is written into the OSL Camera node to create custom camera types. You have two options to load the script.

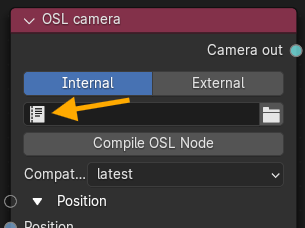

- Internal - Use the Text Editor icon to select a script written in Blender Text Editor (Figure 2).

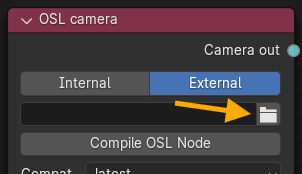

- External - Use the File Browser to load an OSL external text file (Figure 3).

Figure 2: Text Editor icon used to load an internal script

Figure 3: File Browser icon used to load an external script

Here is an initial OSL script:

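```osl
shader OslCamera(
    output point pos = 0,
    output vector dir = 0,
    output float tMax = 1.0/0.0)
{
    // Start every ray at the camera position
    pos = P;
    // Right vector, built from the camera direction and up vector
    vector right = cross(I, N);
    // Offset the direction by the film-plane coordinates, centered on (0.5, 0.5)
    dir = I + right*(u-.5) + N*(v-.5);
}
```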

The initial script’s declaration component includes the three required outputs presented as variables with output types point, vector, and float, respectively. Each OSL I/O type corresponds to an OctaneRender® attribute:

- point corresponds to a Projection attribute node (Box, Mesh, UV, Spherical, Cylindrical, etc.).

- vector corresponds to a Float attribute node (X, Y, Z).

- float corresponds to a Float attribute node (1D-value).

For a list of OSL variable declaration input/output types in the OSL Specification that OctaneRender® supports, refer to the Appendix topic in this manual on OSL Implementation in OctaneRender®.

The three required output variables in the initial script’s declaration represent a camera ray’s position, direction, and maximum depth. The initial script’s function body then initializes the position and orientation of the OSL Camera shader using the OSL global variables P, I, and N, which define any standard camera’s eye, direction, and up vectors, respectively. To further control the position and orientation of the camera shader, you have two options:

- Customize the script using OSL language global variables P, I, and N.

- Transform a point or a vector from camera space to world space.

You can create any camera type by customizing the script. Depending on the custom script, the resulting OSL shader may have more input type variables that appear as additional input pins on the OSL Camera node that represents it.
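As an illustration of how a script input becomes an input pin, here is a minimal sketch of an orthographic camera. The shader name `OslOrthoCamera` and the `Width` input are hypothetical names chosen for this example. The sketch assumes that P, I, and N are in world space, that I and N are unit length and perpendicular, and that pixels are square.

```osl
shader OslOrthoCamera(
    // Hypothetical input: film width in scene units.
    // It should appear as an extra input pin on the node.
    float Width = 2.0 [[ float min = 0.001, float max = 1000 ]],
    output point pos = 0,
    output vector dir = 0,
    output float tMax = 1.0/0.0)
{
    int res[2];
    getattribute("camera:resolution", res);

    // Film height derived from the image aspect ratio (square pixels assumed)
    float height = Width * res[1] / res[0];

    vector right = cross(I, N);

    // Every ray starts at a different point on the film plane...
    pos = P + right * (u - 0.5) * Width + N * (v - 0.5) * height;
    // ...and all rays share the camera direction, so there is no perspective
    dir = I;
}
```

Because the ray origin still derives from P, I, and N, the node's Position, Target, and Up-vector pins continue to drive the camera.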

The OSL Camera Output Variables

The camera shader has three outputs representing a ray (note that the names are arbitrary):

point pos = Ray position:

This is often set to P, but it may be set to other points to implement depth of field, or a near clipping plane.

vector dir = Ray direction:

The render engine will take care of normalizing this vector if needed.

float tMax = Maximum ray tracing depth:

Measured along the direction of dir. May be used to implement a far clipping plane.

Set to 1.0/0.0 (infinity) to disable far clipping.

If tMax is 0, or if dir has 0 length, the returned ray is considered invalid, and the renderer will not perform any path tracing for this sample.
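The three outputs can be combined to sketch near and far clipping. This is a minimal example under stated assumptions, not a reference implementation: `OslClipCamera`, `NearClip`, and `FarClip` are hypothetical names, and the sketch assumes tMax is measured in scene units along the normalized direction. Distances are measured along each ray rather than along the camera axis, so the clipping surfaces are spherical rather than planar.

```osl
shader OslClipCamera(
    // Hypothetical inputs: clipping distances in scene units
    float NearClip = 0.1,
    float FarClip = 100.0,
    output point pos = 0,
    output vector dir = 0,
    output float tMax = 1.0/0.0)
{
    vector right = cross(I, N);

    // Same direction as the initial script, normalized explicitly so that
    // the distances below are in scene units
    vector d = normalize(I + right * (u - 0.5) + N * (v - 0.5));

    // Near clipping: start the ray NearClip units away from the camera
    pos = P + NearClip * d;
    dir = d;

    // Far clipping: limit the ray to the remaining distance.
    // Clamped at 0, which marks the ray as invalid.
    tMax = max(FarClip - NearClip, 0.0);
}
```

If FarClip is set at or below NearClip, tMax becomes 0, so no path tracing is performed for the sample.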

Accessing The OSL Camera Position

Like other camera types, OSL Camera nodes have static input pins that define the camera's position and orientation. It is not mandatory for your camera shader to use this position, but if it does, your camera supports motion blur and stereo rendering.

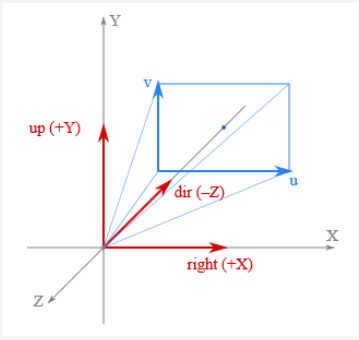

Figure 4: Camera position coordinates

Within camera shaders, the camera's position and orientation are available through the standard global variables defined by OSL:

point P: Camera position

vector I: Camera direction (sometimes called *forward*)

normal N: Up vector, perpendicular to I

float u, float v: Coordinates on the film plane, mapped to the unit square. (0, 0) is at the bottom-left corner. These coordinates can be fetched via getattribute("hit:uv", uv) and via the UV projection node.

Alternatively, the camera position is also available via the camera coordinate space. This is an orthonormal coordinate space. Without a transform, the camera looks along the -Z axis with the +Y axis as its up-vector, i.e. the axes are defined as:

+X: Right vector

+Y: Up vector

-Z: Camera direction
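The axis convention above can be used to build the ray directly in camera space. The following sketch mirrors the initial script: it is illustrative only, the shader name `OslCameraSpace` is hypothetical, and it assumes the outputs are expected in world space.

```osl
shader OslCameraSpace(
    output point pos = 0,
    output vector dir = 0,
    output float tMax = 1.0/0.0)
{
    // The camera sits at the origin of camera space
    pos = transform("camera", "world", point(0, 0, 0));

    // +X is right, +Y is up, and the camera looks along -Z
    vector d = vector(u - 0.5, v - 0.5, -1.0);

    // Vectors are transformed without translation
    dir = transform("camera", "world", d);
}
```

Written this way, the script does not need to read P, I, or N; the camera's placement comes entirely from the camera-to-world transform.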

You can create your own custom Camera using an OSL camera node. As a starting point, below is a basic OSL implementation of a Thin Lens camera:

```osl
shader OslCamera(
    float FocalLength = 1 [[ float min = 0.1, float max = 1000, float sliderexponent = 4]],
    output point pos = 0,
    output vector dir = 0,
    output float tMax = 1.0/0.0)
{
    // Query the pixel aspect ratio and the image resolution
    float pa;
    int res[2];
    getattribute("camera:pixelaspect", pa);
    getattribute("camera:resolution", res);

    // Remap the film coordinates from [0, 1] to [-1, 1],
    // scaling v by the image aspect ratio
    float u1 = 2 * (u - .5);
    float v1 = 2 * (v - .5) * pa * res[1] / res[0];

    pos = P;
    vector right = cross(I, N);
    dir = 2*FocalLength * I + v1 * N + u1 * right;

    // Transform the direction from camera space to world space
    dir = transform("camera", "world", dir);
}
```

For a list of OSL variable declaration input/output types in the OSL Specification that OctaneRender® supports, refer to the Appendix topic on OSL Implementation in the OctaneRender® Standalone manual. To learn more about scripting within OctaneRender® using OSL, see The Octane OSL Guide.

Parameters

- Internal - Use the Text Editor icon to select a script written in the Blender Text Editor.

- External - Use the File Browser to load an OSL external text file.

- Compile OSL Node - Execute the script.

- Compatibility version - The Octane version that the behavior of this node should match.

- Position, Target, Up-vector - Camera position, target, and orientation in 3D space.

- Stereo Output - Format for the Stereo image.

- Stereo mode - Defines the Stereo image based on eye orientation.

- Eye distance - Defines the interocular distance between the stereo images. A value of 0.06 m is close to the average distance between human eyes.

- Swap eyes - Swap between the left and right stereo images.

- Left stereo filter - A color filter used for the left stereo image. Used with Anaglyph 3D glasses.

- Right stereo filter - A color filter used for the right stereo image. Used with Anaglyph 3D glasses.